ReEnFP: Detail-Preserving Face Reconstruction

by Encoding Facial Priors

Abstract

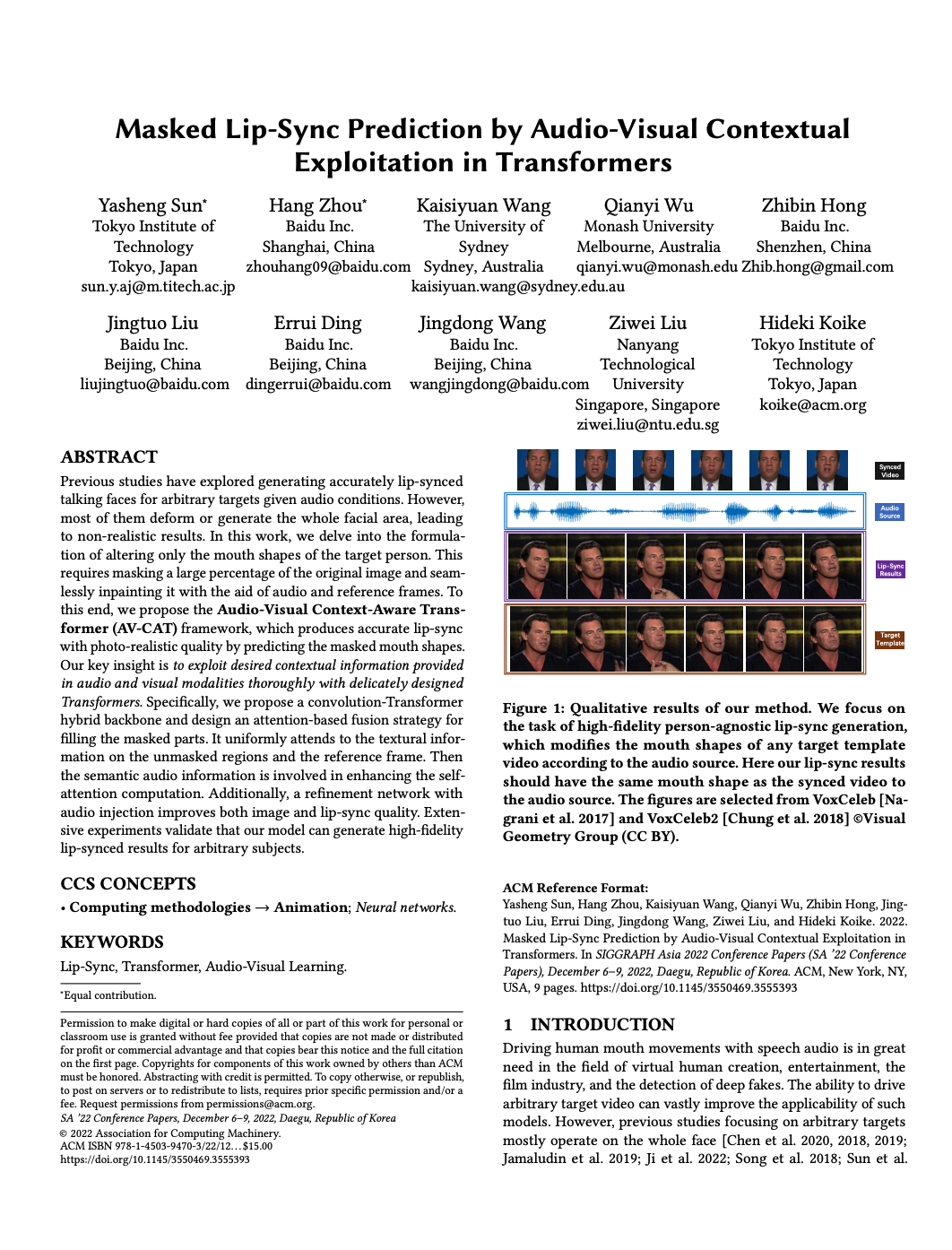

We address the problem of face modeling, which is still challenging in achieving high-quality reconstruction results efficiently. Neither previous regression-based nor optimization-based frameworks could well balance between the facial reconstruction fidelity and efficiency. We notice that the large amount of in-the-wild facial images contain diverse appearance information, however, their underlying knowledge is not fully exploited for face modeling. To this end, we propose our Reconstruction by Encoding Facial Priors (ReEnFP) pipeline to exploit the potential of unconstrained facial images for further improvement. Our key is to encode generative priors learned by a style-based texture generator on unconstrained data for fast and detail-preserving face reconstruction. With our texture generator pre-trained using a differentiable renderer, faces could be encoded to its latent space as opposed to the time-consuming optimization-based inversion. Our generative prior encoding is further enhanced with a pyramid fusion block for adaptive integration of input spatial information. Extensive experiments show that our method reconstructs photo-realistic facial textures and geometric details with precise identity recovery.

Demo Video

Materials

Citation

@InProceedings{Sun_2023_WACV,

author = {Sun, Yasheng and Lin, Jiangke and Zhou, Hang and Xu, Zhiliang and He, Dongliang and Koike, Hideki},

title = {ReEnFP: Detail-Preserving Face Reconstruction by Encoding Facial Priors},

booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

month = {January},

year = {2023},

pages = {6118-6128}

}

}