Sep-Stereo: Visually Guided Stereophonic Audio Generation by Associating Source Separation

Abstract

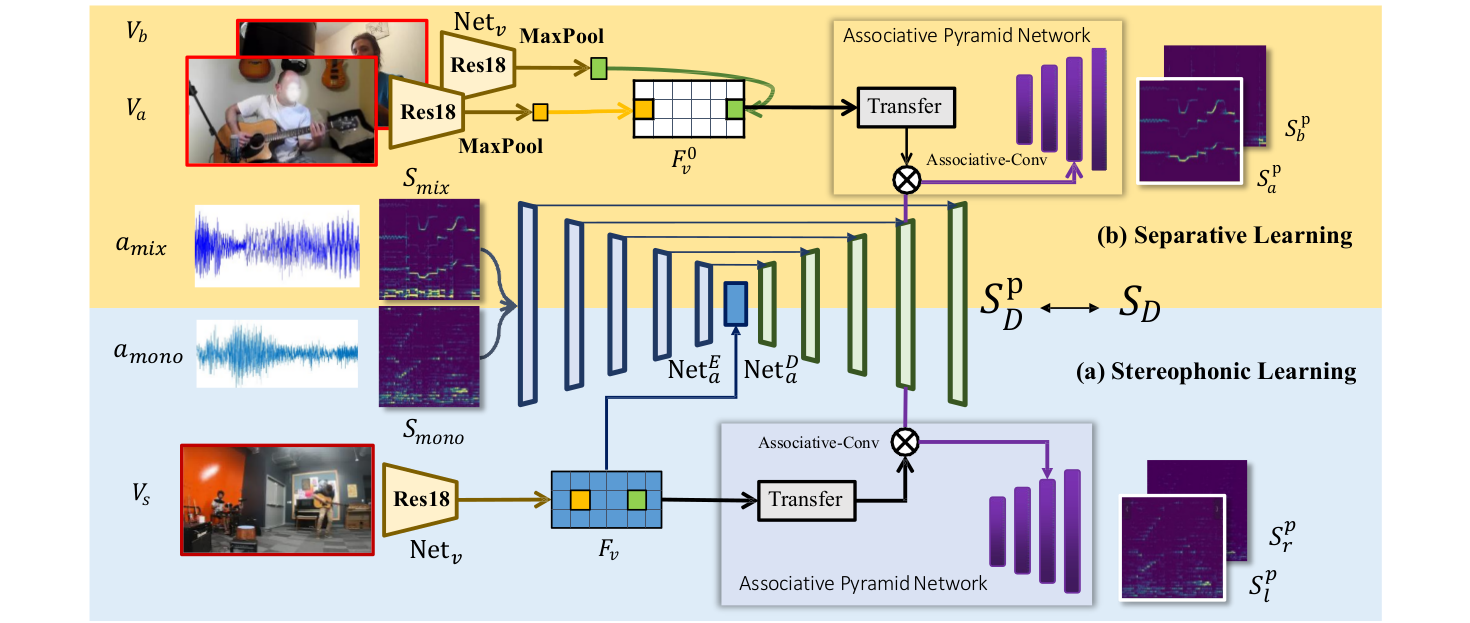

Stereophonic audio is an indispensable ingredient for enhancing the human auditory experience. Recent research has explored using visual information as guidance to generate binaural or ambisonic audio from mono audio with stereo supervision. However, this fully supervised paradigm suffers from an inherent drawback: recording stereophonic audio usually requires delicate devices that are too expensive to be widely accessible. To overcome this challenge, we propose to leverage the vastly available mono audio data to facilitate the generation of stereophonic audio. Our key observation is that the task of visually indicated audio separation also maps independent audio sources to their corresponding visual positions, which shares a similar objective with stereophonic audio generation. We integrate both stereo generation and source separation into a unified framework, Sep-Stereo, by considering source separation as a particular type of audio spatialization. Specifically, a novel associative pyramid network is carefully designed for audio-visual feature fusion. Extensive experiments demonstrate that our framework can improve stereophonic audio generation results while performing accurate sound separation with a single model.
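One way to picture "source separation as a particular type of audio spatialization" is to imagine two mono recordings placed at the far left and far right of the scene, so that each stereo channel carries exactly one source. The sketch below is only an illustration of that reading, not the authors' implementation; the function name `pseudo_stereo_from_mono`, the sine-wave stand-ins for real recordings, and the 16 kHz sample rate are all assumptions made for the example.

```python
import numpy as np


def pseudo_stereo_from_mono(source_a, source_b):
    """Build a (mono mix, two-channel target) pair from two mono signals.

    Assumption for illustration: source_a is treated as sitting at the far
    left and source_b at the far right, so the "stereo" target is simply
    the two separated sources.
    """
    a = np.asarray(source_a, dtype=np.float64)
    b = np.asarray(source_b, dtype=np.float64)
    n = min(len(a), len(b))          # trim both signals to a common length
    a, b = a[:n], b[:n]
    mono_mix = a + b                 # what a model would receive as input
    target = np.stack([a, b])        # row 0 = "left", row 1 = "right"
    return mono_mix, target


# Hypothetical stand-ins for two mono recordings (one second at 16 kHz).
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
source_a = 0.5 * np.sin(2 * np.pi * 440.0 * t)
source_b = 0.5 * np.sin(2 * np.pi * 220.0 * t)

mono_mix, target = pseudo_stereo_from_mono(source_a, source_b)
```

Under this framing, recovering the two channels of `target` from `mono_mix` is a separation problem expressed in the same input/output form as mono-to-stereo generation, which is why mono-only data can serve as additional training material.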

Demo Video

Materials

Code and Models

Citation

@inproceedings{zhou2020sep,
  title={Sep-Stereo: Visually Guided Stereophonic Audio Generation by Associating Source Separation},
  author={Zhou, Hang and Xu, Xudong and Lin, Dahua and Wang, Xiaogang and Liu, Ziwei},
  booktitle={Proceedings of the European Conference on Computer Vision (ECCV)},
  year={2020}
}