StyleSwap: Style-Based Generator Empowers Robust Face Swapping

Abstract

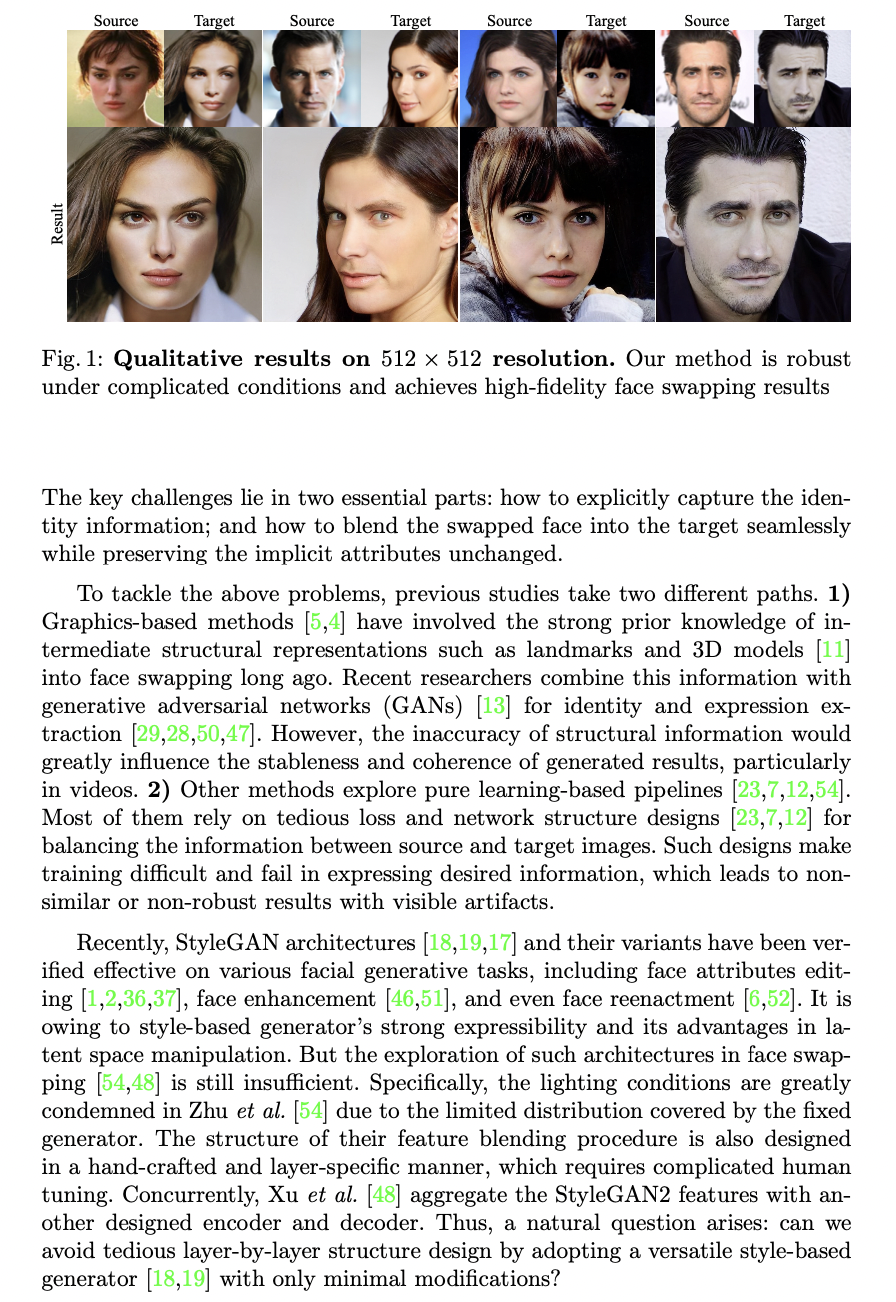

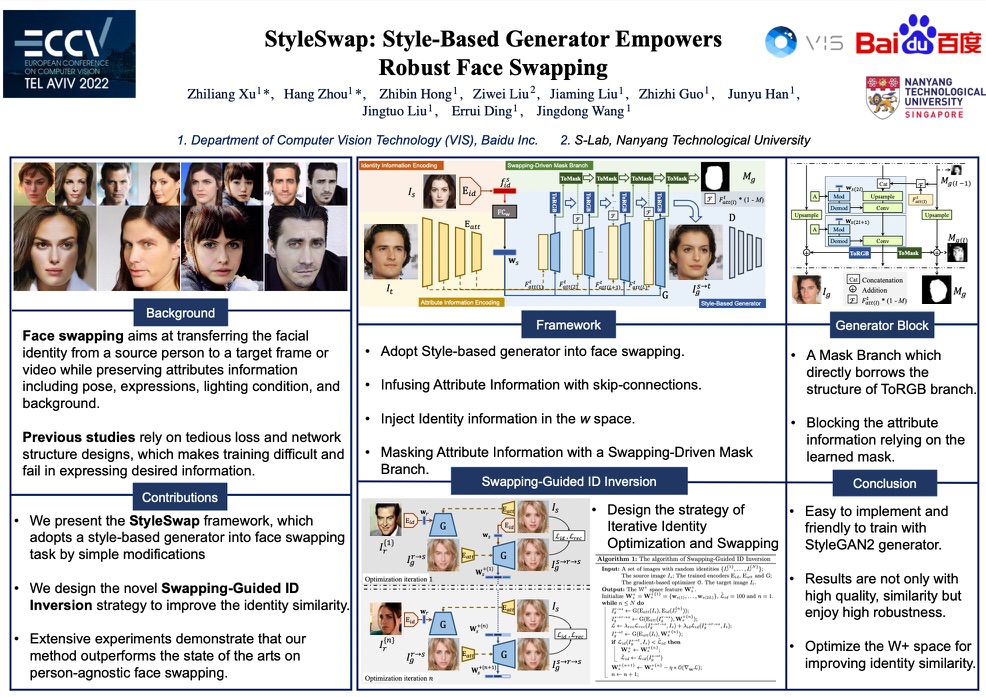

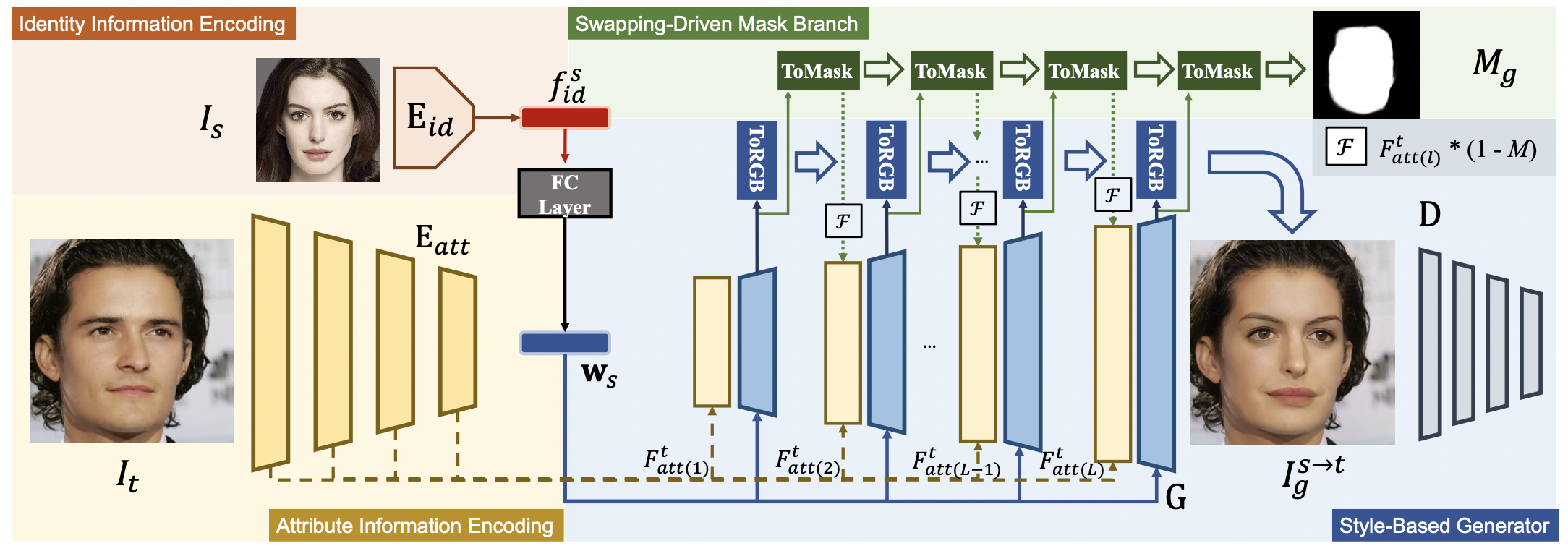

Numerous attempts have been made at person-agnostic face swapping, given its wide range of applications. While existing methods mostly rely on tedious network and loss designs, they still struggle to balance information between the source and target faces, and tend to produce visible artifacts. In this work, we introduce a concise and effective framework named StyleSwap. Our core idea is to leverage a style-based generator to empower high-fidelity and robust face swapping, which also lets us exploit the generator's strengths when optimizing identity similarity. We find that, with only minimal modifications, a StyleGAN2 architecture can successfully handle the desired information from both the source and the target. Additionally, inspired by the ToRGB layers, we devise a Swapping-Driven Mask Branch to improve information blending. Furthermore, we exploit StyleGAN inversion: in particular, we propose a Swapping-Guided ID Inversion strategy to optimize identity similarity. Extensive experiments validate that our framework generates high-quality face swapping results that outperform state-of-the-art methods both qualitatively and quantitatively.
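The abstract says a StyleGAN2 generator needs only minimal modifications to absorb information from both faces. A style-based generator takes in conditioning through modulated convolution, sketched below in NumPy for the 1x1 case. It assumes, purely for illustration, that the source identity embedding is concatenated with a latent code before the per-layer affine transform and that the feature map `x` carries target information; `style_from_inputs`, `demodulated_weights`, `modulated_conv1x1` and all shapes are hypothetical names chosen here, not the StyleSwap implementation. Noise injection, bias and activation are omitted.

```python
import numpy as np

def style_from_inputs(w_latent: np.ndarray, id_embed: np.ndarray,
                      A: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Hypothetical affine map from [latent code, identity embedding] to per-channel scales."""
    return A @ np.concatenate([w_latent, id_embed]) + b

def demodulated_weights(weight: np.ndarray, style: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """StyleGAN2 modulation + demodulation for a (C_out, C_in) 1x1 kernel."""
    w_mod = weight * style[None, :]  # scale each input channel by its style value
    # Normalise each output filter to (almost exactly) unit L2 norm.
    return w_mod / np.sqrt(np.sum(w_mod ** 2, axis=1, keepdims=True) + eps)

def modulated_conv1x1(x: np.ndarray, weight: np.ndarray, style: np.ndarray) -> np.ndarray:
    """Apply the demodulated 1x1 kernel to a (C_in, H, W) feature map."""
    return np.einsum("oi,ihw->ohw", demodulated_weights(weight, style), x)

# Toy usage with random stand-ins for every tensor.
rng = np.random.default_rng(0)
c_in, c_out, h, w = 16, 8, 4, 4
w_latent = rng.standard_normal(32)
id_embed = rng.standard_normal(64)        # stand-in for a face-recognition embedding
A = rng.standard_normal((c_in, 96)) * 0.1
b = np.ones(c_in)                         # StyleGAN2 initialises the affine bias to 1
x = rng.standard_normal((c_in, h, w))     # stand-in for target-derived features
weight = rng.standard_normal((c_out, c_in))
style = style_from_inputs(w_latent, id_embed, A, b)
y = modulated_conv1x1(x, weight, style)
```

Demodulation keeps the expected output scale fixed (for unit-variance inputs), so the identity-dependent style changes which input channels each filter emphasizes rather than the overall activation magnitude; this is the reason StyleGAN2 introduced it.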
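The Swapping-Driven Mask Branch is described only as being inspired by the ToRGB layers. In StyleGAN2's skip generator, each resolution's ToRGB output is added to an upsampled running sum. The sketch below applies that same accumulation to single-channel mask logits, squashes the result with a sigmoid, and blends the generated face into the target. The sigmoid, the blending rule, the nearest-neighbour upsampling and the helper names are assumptions for illustration, and the random per-resolution logits stand in for whatever the real branch predicts.

```python
import numpy as np

def upsample2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of an (H, W) map.
    StyleGAN2's skip generator uses a blurred (FIR-filtered) upsample instead."""
    return np.repeat(np.repeat(x, 2, axis=0), 2, axis=1)

def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))

def accumulate_mask(logits_per_res: list) -> np.ndarray:
    """Skip-style accumulation, mirroring how ToRGB outputs are summed:
    upsample the running sum, then add the logits of the next resolution."""
    acc = logits_per_res[0]
    for logits in logits_per_res[1:]:
        acc = upsample2x(acc) + logits
    return sigmoid(acc)  # squash accumulated logits into a soft mask in (0, 1)

def blend(swapped: np.ndarray, target: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Per-pixel blend: mask near 1 keeps the generated face, near 0 keeps the target."""
    m = mask[..., None]  # broadcast the (H, W) mask over the RGB channels
    return m * swapped + (1.0 - m) * target

# Toy usage: random stand-ins for mask logits at 4x4, 8x8 and 16x16.
rng = np.random.default_rng(0)
logits = [rng.standard_normal((4 * 2 ** i, 4 * 2 ** i)) for i in range(3)]
mask = accumulate_mask(logits)
swapped = np.ones((16, 16, 3))   # pretend generator output
target = np.zeros((16, 16, 3))   # pretend target frame
out = blend(swapped, target, mask)
```

Accumulating across resolutions lets coarse layers set the overall face region while finer layers only refine its boundary, which is the property that makes the ToRGB skip design a natural template for a blending mask.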
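The abstract does not spell out the Swapping-Guided ID Inversion procedure. The sketch below shows only the generic idea underneath identity-driven inversion: optimizing an input code so that the identity embedding of the generated face moves toward a source identity under cosine similarity. It is a hypothetical toy, not the paper's strategy. The generator and identity encoder are collapsed into one linear map `M`, the gradient is written in closed form instead of coming from autograd, and the swapping guidance and any regularizers are left out.

```python
import numpy as np

def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two 1-D vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def invert_identity(M: np.ndarray, z0: np.ndarray, target_id: np.ndarray,
                    lr: float = 0.5, steps: int = 500) -> np.ndarray:
    """Gradient ascent on cos(M @ z, target_id) with respect to the code z.

    M is a toy linear stand-in for "identity encoder applied to generator output"."""
    z = z0.astype(float).copy()
    t_norm = np.linalg.norm(target_id)
    for _ in range(steps):
        u = M @ z                                   # identity embedding of current output
        u_norm = np.linalg.norm(u)
        # d cos(u, t) / du = t / (|u||t|) - (u.t) u / (|u|^3 |t|)
        grad_u = target_id / (u_norm * t_norm) - (u @ target_id) * u / (u_norm ** 3 * t_norm)
        z += lr * (M.T @ grad_u)                    # chain rule back to z, then ascend
    return z

rng = np.random.default_rng(0)
dim = 8
M, _ = np.linalg.qr(rng.standard_normal((dim, dim)))  # orthogonal, well-conditioned toy map
z0 = rng.standard_normal(dim)                          # initial code
target_id = rng.standard_normal(dim)                   # stand-in for the source identity
z = invert_identity(M, z0, target_id)
before, after = cosine(M @ z0, target_id), cosine(M @ z, target_id)
```

Cosine similarity is the usual choice here because face-recognition embeddings are compared by angle, so the optimization only needs to rotate the embedding toward the source, not match its length.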

Citation

@inproceedings{xu2022styleswap,
  title = {StyleSwap: Style-Based Generator Empowers Robust Face Swapping},
  author = {Xu, Zhiliang and Zhou, Hang and Hong, Zhibin and Liu, Ziwei and Liu, Jiaming and Guo, Zhizhi and Han, Junyu and Liu, Jingtuo and Ding, Errui and Wang, Jingdong},
  booktitle = {Proceedings of the European Conference on Computer Vision (ECCV)},
  year = {2022}
}